Web crawling platform for information retrieval

A web crawler, also known as a web spider, is a computer program that automatically and methodically visits websites and retrieves their content. The retrieved information is then indexed and stored in a database. While web crawlers are most often associated with search engines, where they improve the search experience for users, they can be used for a variety of purposes, such as data mining, website monitoring, and content analysis. To build our custom web crawling software, we chose .NET/C# because of the wide range of libraries and tools available for web crawling and data processing in C#, as well as the ease of integrating it with other technologies such as databases and web frameworks.

Challenge

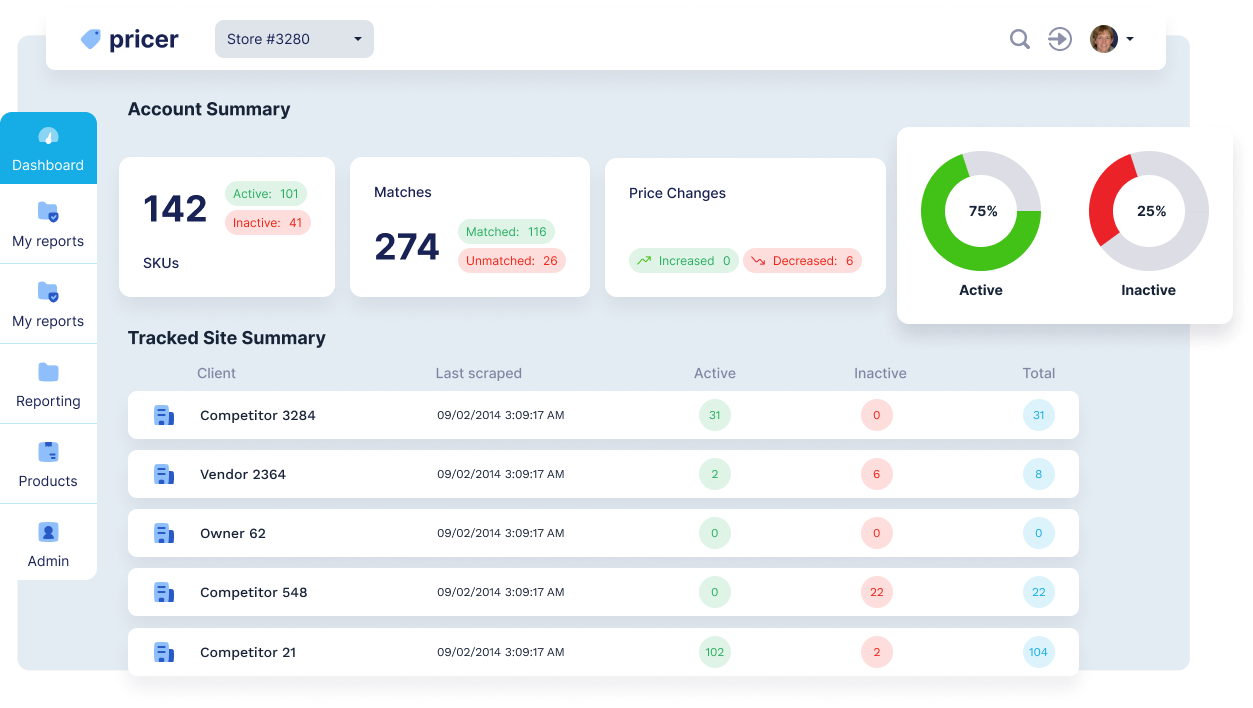

Our web crawling platform was designed for the purpose of data mining. Its final goal was to collect millions of web pages from hundreds of different websites and to extract relevant data from them on a monthly basis to provide users with a comparative shopping service and experience.

The number of pages that needed to be retrieved and analyzed for this purpose ranged from several hundred to several million per website. Some sites are crawled on a regular basis, while others are crawled only once. In some cases, the crawl has to be completed under a very strict deadline.

The original approach to meeting such demands was to develop a dedicated app for each of the target websites. Initially, it did not rely on any of the modern cloud platforms, so a large payload had to be handled by splitting it and running multiple instances of the same app on many different machines, each processing its own part. As time went on, it became challenging to keep developing and maintaining all of those apps. The problems we had to deal with were the constant changes in the structure of the crawled websites, the app updates needed to keep pace with technology trends, the need to write new apps in the most efficient and optimized way, and the constant need to reconfigure how and where they ran.

All of this led to the idea of developing a highly scalable, generic website crawling solution that could run any kind of web crawling process (or, rather, any kind of workflow), so that processing, transforming and storing the collected data was also covered. Additionally, we had to improve the crawling logic for some of the problematic websites, which required constant workflow changes and manual intervention due to their advanced bot protection. Finally, we had to find a way for the new solution to adapt to constantly changing load and deadline demands.

Requirements

Generic architecture

The first challenge was to abstract and standardize the way users define what the system needs to do for all the different crawling processes. The core logic can be described as follows:

- Fetch the content of a web page (via some sort of HTTP GET logic)

- Extract the desired parts of the content (most commonly via some sort of XPath logic)

- Do something with the extracted data, e.g. store it in a database or a file

- Do all of the above in a loop for a number of pages
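
The four steps above can be sketched in a few lines. This is a minimal Python illustration rather than the platform's actual C# code: the `crawl` function, the `shop.example` URLs and the in-memory stand-ins for the network and the database are all hypothetical. It also assumes well-formed markup, since the standard library's `ElementTree` only supports a subset of XPath and cannot parse arbitrary real-world HTML.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Iterable, List


def crawl(urls: Iterable[str],
          fetch: Callable[[str], str],
          xpath: str,
          store: Callable[[str, List[str]], None]) -> None:
    """Fetch each page, extract the matching parts, and hand them to storage."""
    for url in urls:                      # step 4: loop over the pages
        markup = fetch(url)               # step 1: get the page content
        root = ET.fromstring(markup)      # assumption: markup is well-formed
        values = [el.text or "" for el in root.findall(xpath)]  # step 2: extract
        store(url, values)                # step 3: do something with the data


# Usage with in-memory stand-ins for the network and the database.
PAGES = {
    "https://shop.example/p/1": "<html><body><span class='price'>9.99</span></body></html>",
    "https://shop.example/p/2": "<html><body><span class='price'>24.50</span></body></html>",
}
results = {}
crawl(PAGES, PAGES.__getitem__, ".//span[@class='price']", results.__setitem__)
print(results)
```

Passing `fetch` and `store` in as parameters mirrors the idea of tasks whose behavior is set through properties: the loop stays the same while the website-specific parts are swapped.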

The idea was to pack the logic of common functionalities like these into tasks, which users can then invoke, defining website-specific behavior through properties on those tasks. The most challenging part was figuring out how to present this to the users. We had to come up with a user-friendly solution that lets people define, view and understand complex workflows as simply and as quickly as possible.

Advanced crawling tasks

A real-life web crawling scenario may be much more complex than the simplified view given above. More and more websites introduce various crawling protection methods. We were using rotating proxies, mimicking human behavior via JavaScript, as well as some other techniques (which we also needed to pack into tasks). However, some sites are so good at detecting bots that nothing except loading them in a real browser helps. This is why we needed to assess the best headless browser solution and incorporate it into our logic.

Horizontal scaling

The crucial project requirement was that the crawling solution be able to automatically scale the work across multiple machines.

This meant that we had to come up with some kind of cluster solution in the cloud. For workflows whose processing time is not important and which are scheduled to run at different times, the cluster would dynamically expand and contract based on load. For the important ones, which are under a strict deadline, one may need to run a dedicated cluster with specified scaling parameters, calculated so that the workflows can be completed in time.
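
As a rough illustration of how such scaling parameters might be calculated, the sketch below sizes a dedicated cluster from a page count, a per-worker throughput and a deadline. It is written in Python for brevity; the function name and all of the figures are hypothetical, and a real estimate would also have to account for retries, throttling and uneven page sizes.

```python
import math


def workers_needed(pages: int, pages_per_worker_per_hour: float, deadline_hours: float) -> int:
    """Smallest number of workers that can process `pages` within the deadline."""
    capacity_per_worker = pages_per_worker_per_hour * deadline_hours
    return math.ceil(pages / capacity_per_worker)


# Hypothetical figures: 2 million pages, 5,000 pages per worker per hour, 48 hours.
print(workers_needed(2_000_000, 5_000, 48))  # 9
```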

Solution

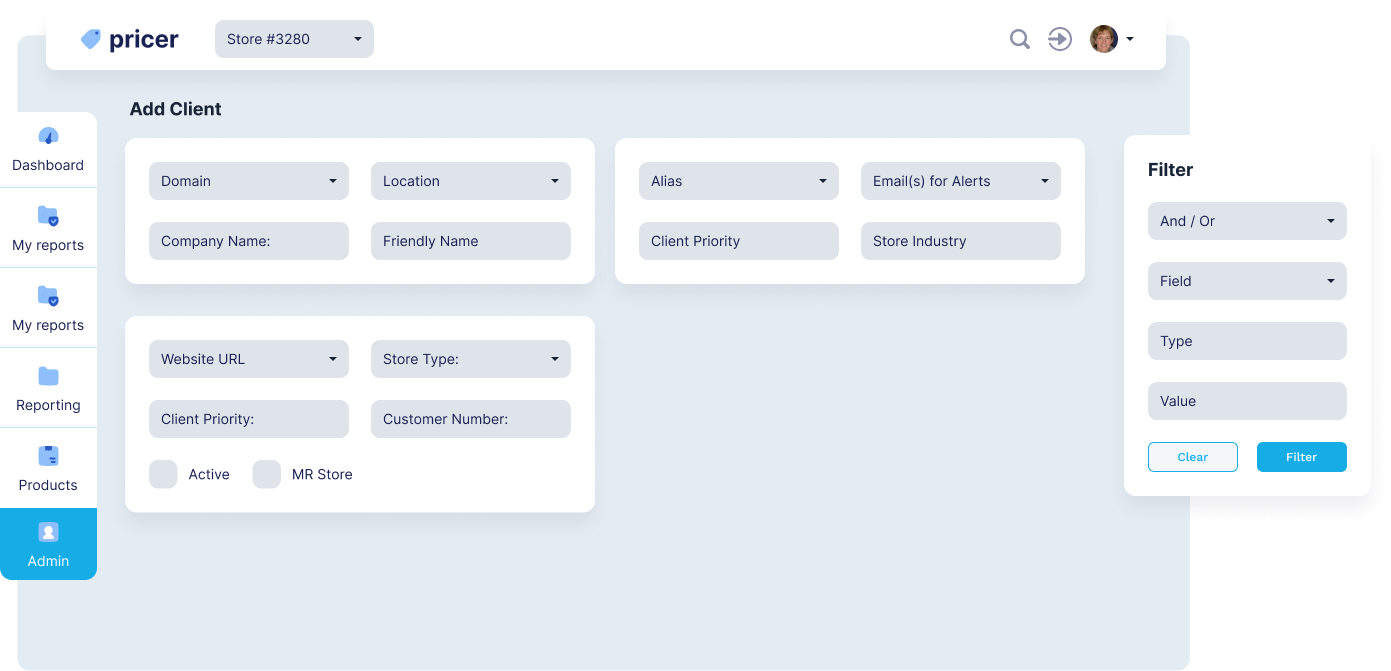

The system had to handle custom logic and website bot protection, be usable by inexperienced users, and scale horizontally. We were not aware of any commercial solution that met all of these criteria, so the generic web crawling workflow system we had to build was, as far as we knew, the first of its kind on the market. Our aim was to build the best price comparison site.

We managed to implement a generic solution by describing workflows in XML, providing a library of packed tasks able to run that XML, and implementing custom task scripting via the Roslyn compiler platform. Advanced web crawling tasks and bot protection handling were done via CEF (Chromium Embedded Framework). Horizontal scaling was implemented by modeling the entire application architecture with the Akka.NET actor model framework and using its built-in cluster capabilities. Docker and Kubernetes were used for running the application parts in the cloud environment.

Technical details

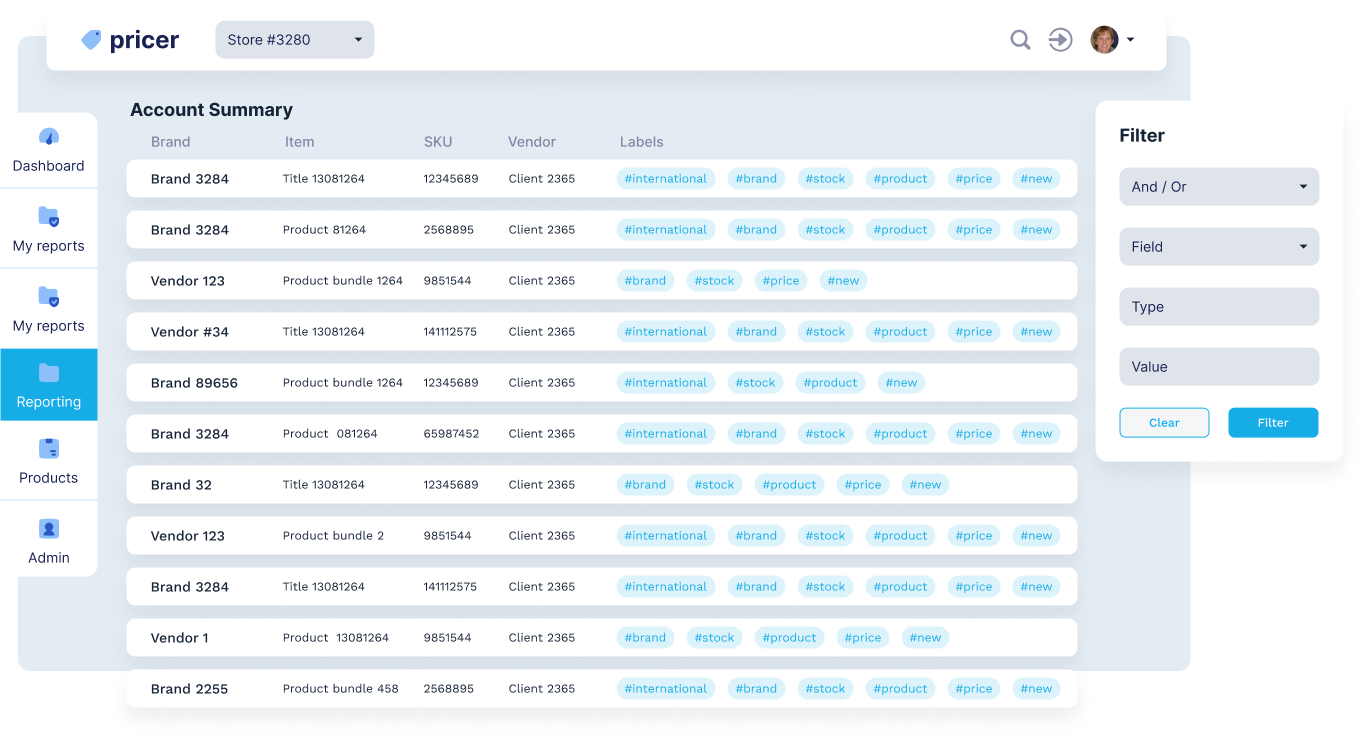

Defining workflows via XML

One of the initial demands was a user interface that would make the system usable by someone technically savvy, but not necessarily an experienced software developer. We looked at commercial tools like Mozenda for ideas, and developed a flowchart-like user interface in ReactJS and .NET Web API, with the ability to drag and drop tasks and define their properties visually. Furthermore, we created a workflow model as a class library on the backend and were able to transform it to and from our frontend representation. We were also able to serialize it to and deserialize it from a database.

After testing such a solution for a while, it turned out that really complex workflows with hundreds of tasks (i.e. most of our client's workflows) were simply too complex to comprehend, search through and change in such a visual manner. Another problem was that the frequent changes were hard to track and revert – it seemed as though we needed a source control system for the stored workflows.

Since we already used XML for serialization, it occurred to us to try a decent XML-friendly text editor and work directly on the XML, which made everyone more productive. A simple file-per-workflow definition was also well suited to source control. Hence, we opted for this simpler yet more effective solution, while completely separating the logic of designing workflows from running them.
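
To give a feel for what a file-as-a-workflow definition could look like, here is a small sketch. The element and attribute names (`workflow`, `loop`, `httpGet`, `extract`, `store`) are invented for illustration and are not the platform's actual schema; the Python code simply reads the XML and lists the task tree with each task's properties.

```python
import xml.etree.ElementTree as ET

# Hypothetical workflow definition: tasks are elements, properties are attributes.
WORKFLOW_XML = """
<workflow name="example-shop">
  <loop variable="page" from="1" to="3">
    <httpGet url="https://shop.example/list?page={page}" output="html" />
    <extract input="html" xpath="//div[@class='product']" output="products" />
    <store input="products" target="products.csv" />
  </loop>
</workflow>
"""


def describe(element: ET.Element, depth: int = 0) -> list:
    """Flatten the task tree into indented 'task(properties)' lines."""
    props = ", ".join(f"{key}={value}" for key, value in element.attrib.items())
    lines = ["  " * depth + f"{element.tag}({props})"]
    for child in element:
        lines.extend(describe(child, depth + 1))
    return lines


workflow = ET.fromstring(WORKFLOW_XML)
for line in describe(workflow):
    print(line)
```

Because the definition is plain text, a change to a single property shows up as a one-line diff in source control, which is the tracking and reverting ability the visual editor lacked.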

Expression Engine

As workflows grew in complexity, our XML files needed to support almost anything one might do in a programming language. We packed complex functionalities into tasks, but there were many custom math operations or data transformations that simply couldn't be defined in such a generic manner. We realized that we needed the ability to write small code snippets and use them to accomplish any kind of custom functionality, so we searched for an expression engine capable of parsing and running custom code.

As our workflow was effectively a script, with variables defined dynamically on the fly, we thought the most suitable scripting language would be JavaScript. Since our code was written in .NET, our first solution was ClearScript, Microsoft's library for embedding the V8 JavaScript engine in .NET applications. However, we used it only at the beginning, as we later found that it caused major performance problems for us.

Looking further, we decided to try Microsoft's .NET Compiler Platform (Roslyn) and write our scripts in C#. Once we made the switch, we never looked back, since the performance was much better than with any of the other solutions we had tried. There is an initial compilation penalty, but we reduced it by caching each of our snippets on disk as a dynamically generated assembly and loading it from there on every repeated run. This gave us the final ingredient for writing workflows with any kind of custom logic.
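
The compile-once, load-on-repeat idea can be illustrated with a small analogue. The sketch below is Python, not Roslyn: it uses Python's built-in `compile` and `marshal` in place of C# compilation and generated assemblies, and the cache location, function names and sample expression are all assumptions made for the example. Snippets are keyed by a hash of their source, so an edited snippet is recompiled while an unchanged one is loaded from disk.

```python
import hashlib
import marshal
import sys
import tempfile
from pathlib import Path

CACHE_DIR = Path(tempfile.gettempdir()) / "snippet-cache"  # hypothetical location


def load_snippet(source: str, cache_dir: Path = CACHE_DIR):
    """Return compiled code for `source`, compiling only on a cache miss."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    # The interpreter version is part of the key because marshal data is version-specific.
    key = hashlib.sha256((sys.version + source).encode("utf-8")).hexdigest()
    cached = cache_dir / f"{key}.bin"
    if cached.exists():
        return marshal.loads(cached.read_bytes())   # repeated run: skip compilation
    code = compile(source, "<snippet>", "eval")      # first run: pay the compile cost
    cached.write_bytes(marshal.dumps(code))
    return code


def run_snippet(source: str, variables: dict):
    """Evaluate an expression snippet against the workflow's variables."""
    return eval(load_snippet(source), {}, dict(variables))


print(run_snippet("price * quantity", {"price": 2.5, "quantity": 4}))  # 10.0
```

Note that `eval` runs arbitrary code, so this pattern is only appropriate for snippets written by trusted workflow authors.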

One may wonder why all that XML & C# scripting effort. Why not just pack the major functionalities as a class library and then have programmers write custom apps or modules which use that? Well, there are a number of reasons why that would not be a good choice.

The first, and most important, reason is that it was simply hard to enforce discipline among the developer-users of the app and keep them from introducing their own modified versions of standard tasks, which would have left us with a maintenance and, possibly, a performance mess.

Second, the XML scripts for simpler workflows were written by people in other roles, not strictly developers.

The third reason was simplicity. Although it would be possible to write a custom library module for each workflow, recompile it on every change and plug it into the main platform for running, that would be far more complex than just changing a simple script file and re-running it.

CEF as headless browser

A large part of our development time was dedicated to the headless browser. We had previous experience with PhantomJS and Selenium, but neither of them was able to cover all the required scenarios, and neither had an organized code base ready to be used across the board. We had previously used CEF (Chromium Embedded Framework) on another project to render complex graphics in a desktop application, and had a positive experience with it. With Chromium at its base, years of active development and a large community, it seemed reliable and full of potential. We followed the guidelines on how to run it in headless (or, more precisely, windowless) mode and packed everything we could think of into scriptable tasks: creating/destroying an instance, loading a URL, getting the HTML from the current DOM, setting a proxy, setting/clearing cookies, executing JavaScript, etc.

We used Xilium.CefGlue as the .NET binding for CEF, because it gave us a de facto 1:1 mapping to the native C++ CEF code, in contrast to CefSharp, which is more user-friendly and has a gentler learning curve. What also made it the better choice was that we could produce our own custom CEF builds and upgrade to newer versions more frequently.

Finally, with the emergence of .NET Core, we wanted to run isolated crawling processes on a number of available Linux machines, so we had to produce a custom Linux CEF build and integrate it into our app.

Actor framework for distributing work

While looking into the best way to do horizontal scaling, we chose the Actor Model. Reading through resources such as "Distributed Systems Done Right: Embracing the Actor Model" helped us decide that this was the right architecture for us. It later proved to be the right decision, not only because of the built-in scaling and cluster support, but also because of how it handles concurrency problems (e.g. different parts of a workflow performing conflicting actions at the same time) and fault tolerance (e.g. one of the workflow's worker parts becoming unreachable on the network or failing due to an error).

With .NET as our core technology, the choice was between two actor frameworks: Microsoft's Orleans and Akka.NET, a port of the Akka framework from Java/Scala. We spent some time evaluating both and chose Akka.NET. Despite its slightly steeper learning curve, it is more open, more customizable and closer to the original actor model philosophy.

A cluster environment was our primary goal, but as the implementation progressed, we realized that there were problems we simply could not solve without the actor model. These problems are inherent to distributed systems. For example, as our workflow was parallelized, there was a need to pause, restart or cancel the whole workflow, either by manual action from the user interface or by the workflow logic itself in one of the parallel iterations. On a single machine, one could use various locking techniques to synchronize the behavior of parallel executions, but in a distributed system those techniques no longer apply.

Akka, like other actor model implementations, is designed to deal with such problems. With every logical unit of work handled by a single actor responsible for it, concurrency became easy to deal with, since messages (i.e. commands) are always processed one at a time. The workflow control problem was solved by a single runner actor, which handled pause, restart or cancel messages one at a time, notified the other (distributed) parts/actors of the same workflow, and did not proceed with further message processing until it had made sure they had completed their part.
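
The runner pattern described above can be sketched without any actor framework. The Python code below is a toy model, not Akka.NET: the `Actor`, `Worker` and `Runner` classes, the message names and the single-threaded `run_until_idle` dispatcher are all assumptions made for illustration. It shows the two properties that matter here: each actor takes one message at a time from its mailbox, and the runner holds back any new command until every worker has acknowledged the current one.

```python
from collections import deque


class Actor:
    """An actor owns a mailbox and handles one message at a time."""

    def __init__(self, name):
        self.name = name
        self.mailbox = deque()

    def tell(self, message, sender=None):
        self.mailbox.append((message, sender))

    def process_one(self):
        if not self.mailbox:
            return False
        message, sender = self.mailbox.popleft()
        self.receive(message, sender)
        return True

    def receive(self, message, sender):
        raise NotImplementedError


class Worker(Actor):
    def __init__(self, name):
        super().__init__(name)
        self.state = "running"

    def receive(self, message, sender):
        self.state = {"pause": "paused", "restart": "running", "cancel": "cancelled"}[message]
        sender.tell(("ack", self.name))          # confirm completion to the runner


class Runner(Actor):
    """Single point of control: applies one command at a time across all workers."""

    def __init__(self, workers):
        super().__init__("runner")
        self.workers = workers
        self.pending_acks = set()
        self.stashed = deque()                   # commands that arrived mid-transition
        self.log = []

    def receive(self, message, sender):
        if isinstance(message, tuple) and message[0] == "ack":
            self.pending_acks.discard(message[1])
            if not self.pending_acks:
                self.log.append("done")
                if self.stashed:
                    self.start(self.stashed.popleft())
        elif self.pending_acks:
            self.stashed.append(message)         # wait until the current command completes
        else:
            self.start(message)

    def start(self, command):
        self.log.append(command)
        self.pending_acks = {worker.name for worker in self.workers}
        for worker in self.workers:
            worker.tell(command, sender=self)


def run_until_idle(actors):
    """Toy single-threaded dispatcher standing in for the actor runtime."""
    while any([actor.process_one() for actor in actors]):
        pass


workers = [Worker("w1"), Worker("w2")]
runner = Runner(workers)
runner.tell("pause")
runner.tell("cancel")                            # arrives while the pause is still in flight
run_until_idle([runner] + workers)
print(runner.log)                                # ['pause', 'done', 'cancel', 'done']
print([worker.state for worker in workers])      # ['cancelled', 'cancelled']
```

No locks appear anywhere in the sketch: ordering comes entirely from the mailboxes, which is why the same logic still holds when the workers live on different machines.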

Cluster environment

We dockerized all of our application parts and ran them in Kubernetes, which provided adaptive load balancing, i.e. cluster elasticity. This was not without challenges, as we had to make all our actors persistent and resilient to both cluster expansion and contraction. At any point in time, they had to be able to stop working on one machine and re-emerge with the same restored state on another. This was done via Akka cluster sharding, but we couldn't solve the headless browser part in the same way, as it was simply impossible to save and restore its state. Thus, we had to implement the browser part as a separate microservice, which could scale out to new machines via regular Akka cluster routing, but whose contraction had to be controlled manually.

The client also had a number of physical machines available, which were still running legacy crawling apps, but were underused. We were able to run a direct, static cluster environment on them too. There was no cluster elasticity in this case, and the work was distributed via the adaptive load balancing provided by the Akka cluster metrics module.
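
The general idea behind metrics-based load balancing is to send more work to the nodes with the most spare capacity. The Python sketch below illustrates that idea only; it is not the algorithm used by the Akka cluster metrics module, and the function names, the use of CPU load as the sole metric and the sample node names are all assumptions.

```python
import random


def routing_weights(cpu_load: dict) -> dict:
    """Weight each node by its remaining capacity (1 - load), floored at 0."""
    return {node: max(0.0, 1.0 - load) for node, load in cpu_load.items()}


def pick_node(cpu_load: dict, rng=random) -> str:
    """Pick a node at random, favoring nodes with more spare capacity."""
    weights = routing_weights(cpu_load)
    nodes = list(weights)
    if not any(weights.values()):
        return rng.choice(nodes)       # every node saturated: fall back to uniform choice
    return rng.choices(nodes, weights=[weights[n] for n in nodes], k=1)[0]


# Hypothetical load snapshot: node-b is fully loaded and receives no new work.
load = {"node-a": 0.1, "node-b": 1.0, "node-c": 0.5}
rng = random.Random(0)
picks = [pick_node(load, rng) for _ in range(1000)]
print({node: picks.count(node) for node in load})
```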

Conclusion

In summary, the web crawling platform was developed to efficiently collect and process large amounts of data from various websites as a custom solution for a comparison shopping platform. Because the system is complex, we tried several approaches before settling on the final one. The result is a scalable, generic solution that can handle any kind of workflow, including advanced crawling tasks and website bot protection. It was also designed to adapt to changing load and deadline demands and to be user-friendly for both technical and non-technical personnel. To meet these requirements, the platform was built on a generic architecture that incorporates headless browser technology and the ability to scale horizontally. By improving on some of our original approaches and learning throughout development, we ended up with a platform that met the needs of the project.
